Bad Actors Capitalize on Simple Website Flaws

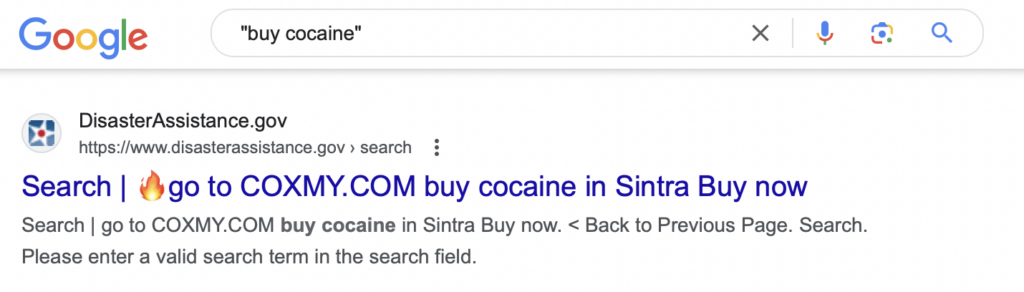

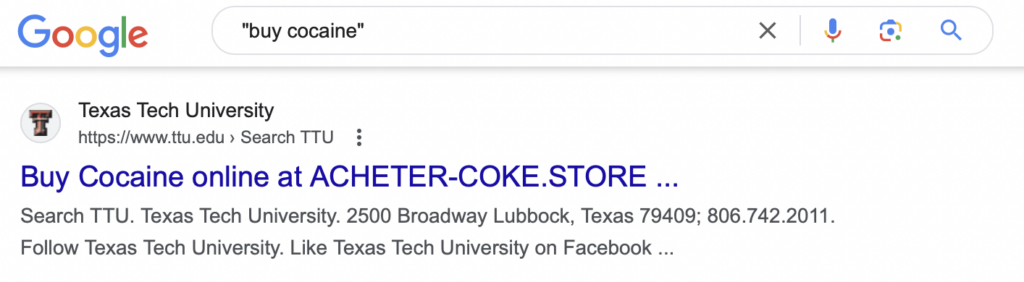

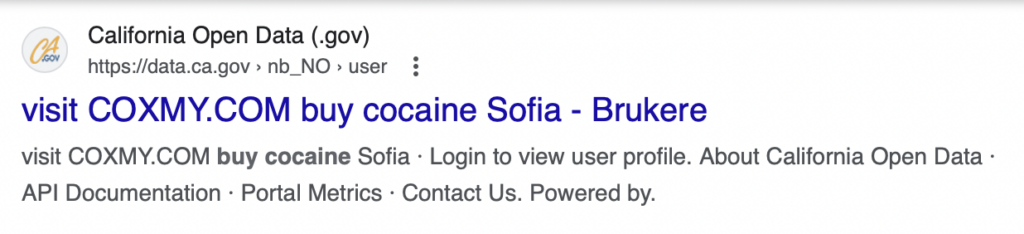

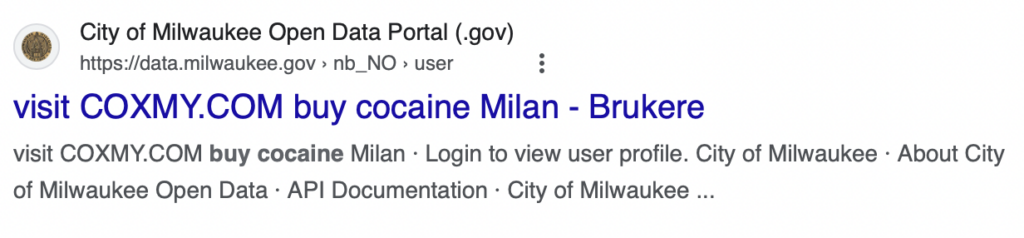

Believe it or not, U.S. government and university websites are being used to promote the sale of illegal drugs, and Google is letting it happen!

Bad actors have figured out a clever way to capitalize on the strong reputation of U.S. government (.gov) and school (.edu) websites. On sites with certain vulnerabilities, this simple trick, which I will demonstrate below, lets anyone inject whatever text they want into that site’s Google search results.

Government and University Websites Targeted for Exploitation

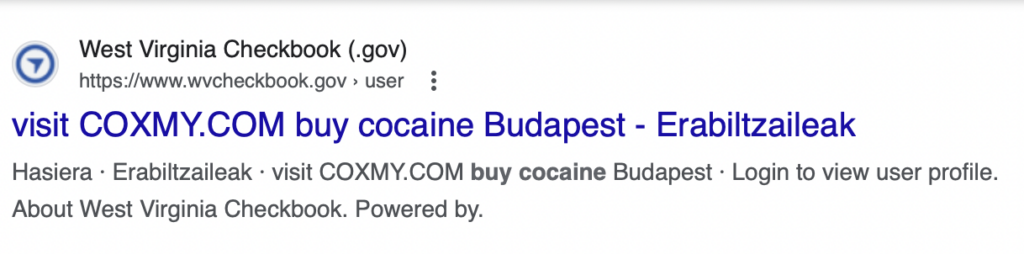

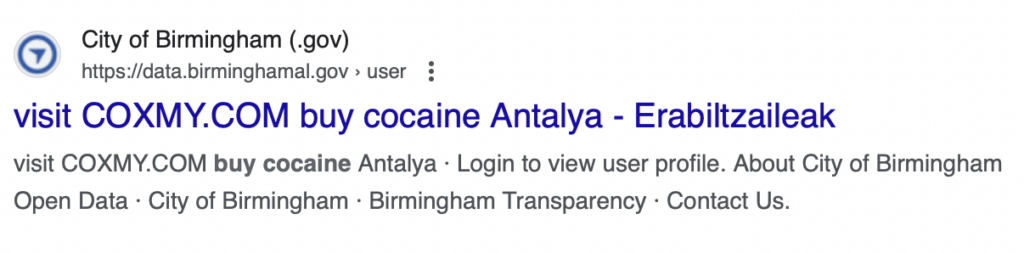

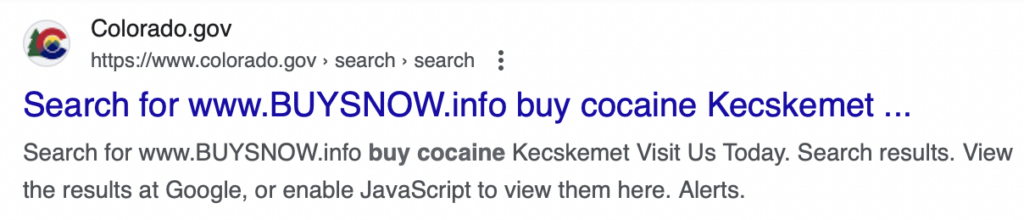

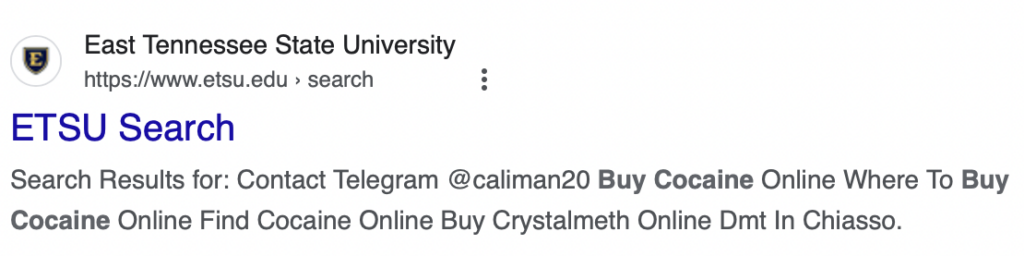

As of today, October 16, 2024, this issue is present on various government and university sites, including:

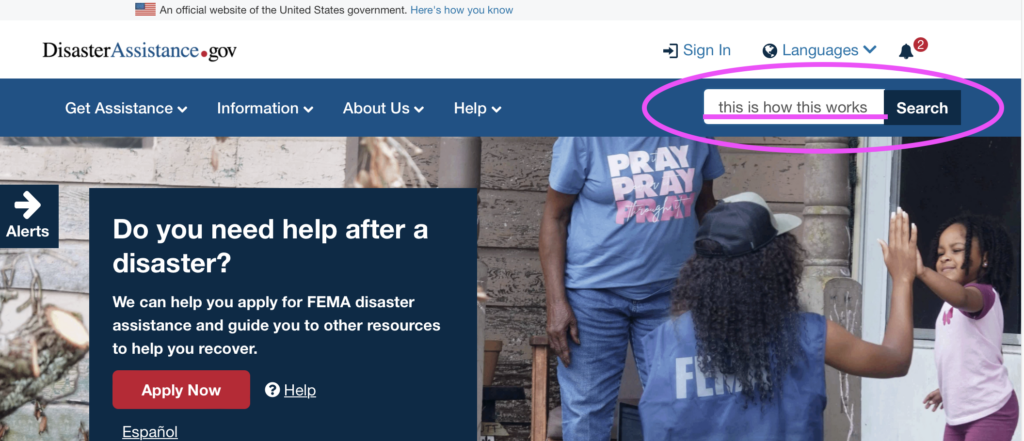

- FEMA Disaster Assistance – Apply for Federal Aid After a Disaster – DisasterAssistance.gov

- West Virginia Checkbook – Financial Transparency for WV Government – WVCheckBook.gov

- California Open Data Portal – Public Data from the State of California – Data.ca.gov

- Official State Website of Colorado – State Services and Resources – Colorado.gov

- City of Birmingham Open Data Portal – Access to Birmingham’s Public Data – data.birminghamal.gov

- City of Milwaukee Open Data Portal – Public Data and Transparency – data.milwaukee.gov

- Marquette University – Private Catholic University in Milwaukee, WI – Marquette.edu

- East Tennessee State University – Public University in Johnson City, TN – Etsu.edu

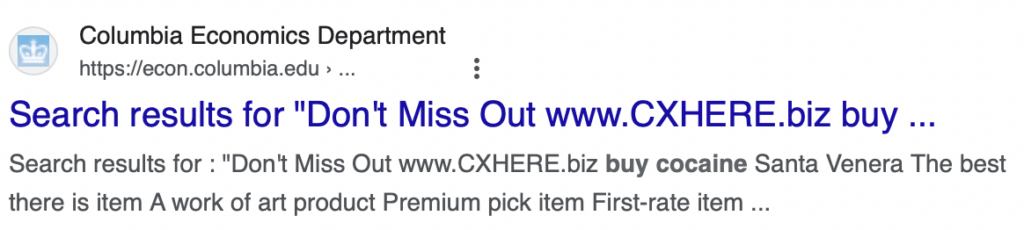

- Columbia University Economics Department – Academic Programs and Research – Econ.columbia.edu

- Texas Tech University – Public Research University in Lubbock, TX – TTU.edu

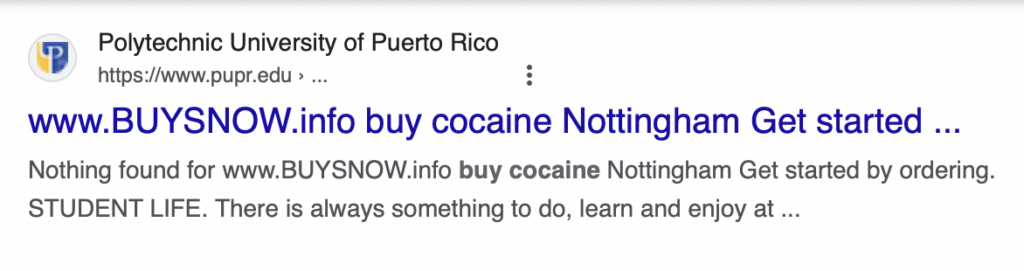

- Polytechnic University of Puerto Rico – Private Engineering and Technical University – pupr.edu

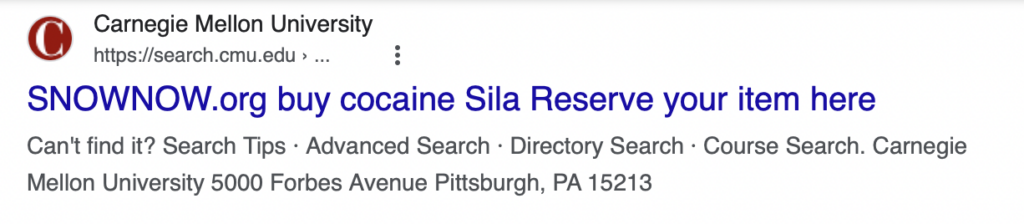

- Carnegie Mellon University – Private Research University in Pittsburgh, PA – cmu.edu

Those are just a few examples of this wildly rampant issue. I could have spent all day collecting more, but I think I’ve made my point.

How the Exploitation Works

This exploitation does not involve traditional hacking. Instead, it abuses a website’s own search box to achieve harmful outcomes.

This is a form of SEO poisoning, a term used to describe a variety of tactics that manipulate search engine results to promote or execute malicious activities.

There are various forms of SEO poisoning, but the two most pertinent to this particular issue are Reflected Search Poisoning (RSP) and Internal Site Search Abuse Promotion (ISAP).

What is Reflected Search Poisoning (RSP)?

Reflected Search Poisoning (RSP) manipulates URLs, specifically search query parameters, to include illicit content, which is then indexed by search engines.
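To make the “reflection” concrete, here is a minimal Python sketch of what a typical site-search box does with whatever a visitor types. It assumes the hypothetical site.com/search?search_term=... URL pattern that this article uses as an example further down; search_url and render_results_title are illustrative names, not code from any real site. The visitor’s text ends up both in a shareable URL and in the page’s visible title.

```python
from html import escape
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical site and query parameter, borrowed from the article's site.com example.
SEARCH_PAGE = "https://site.com/search"


def search_url(term: str) -> str:
    """Build the shareable results URL a typical site-search box produces."""
    return f"{SEARCH_PAGE}?{urlencode({'search_term': term})}"


def render_results_title(url: str) -> str:
    """Echo the query into the page title, as many search result pages do.

    Escaping prevents HTML injection, but the visitor's wording still becomes
    page text that a crawler may index if the URL is crawlable.
    """
    query = parse_qs(urlsplit(url).query).get("search_term", [""])[0]
    return f"<title>Search results for {escape(query)}</title>"


url = search_url("this is how this works")
print(url)                        # https://site.com/search?search_term=this+is+how+this+works
print(render_results_title(url))  # <title>Search results for this is how this works</title>
```

Escaping the query protects against cross-site scripting, but it does nothing against SEO poisoning: whatever words were typed are still on the page, under the site’s domain.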

The cases above appear to use a particular sub-type of this tactic: Internal Site Search Abuse Promotion (ISAP).

What is Internal Site Search Abuse Promotion (ISAP)?

Internal Site Search Abuse Promotion (ISAP) can occur when the internal “site search” feature of a website generates URLs that are indexable by search engines. This means that anything that a user types into a search box on the website can possibly end up getting indexed in Google and similar search engines.

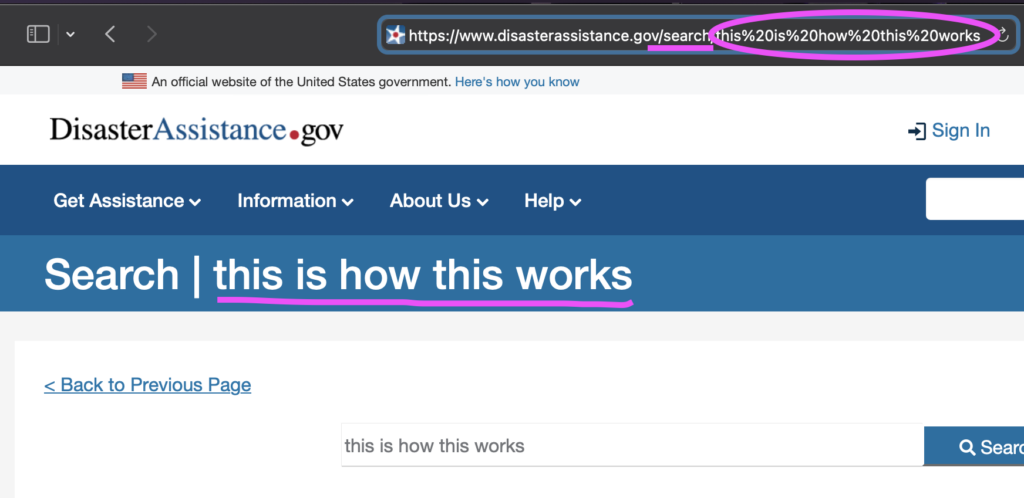

For example, when I type “this is how this works” into DisasterAssistance.gov:

It generates a URL related to my query:

This generation of URLs, in and of itself, is not uncommon. What makes these particular sites vulnerable is the lack of basic SEO best practices such as canonicalizing, disallowing, or noindexing these types of URLs (in English: blocking them from search engines).
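One way to check whether a given search results page is exposed is to look for those signals on the page itself. The Python sketch below uses only the standard library to report whether a page’s HTML or its X-Robots-Tag response header asks search engines not to index it, and which canonical URL (if any) the page declares. RobotsSignals and index_signals are hypothetical names, and the check covers only on-page signals: it does not read robots.txt or tell you whether Google has actually indexed the URL.

```python
from html.parser import HTMLParser


class RobotsSignals(HTMLParser):
    """Collects robots meta directives and the canonical link from an HTML page."""

    def __init__(self):
        super().__init__()
        self.directives = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attribute values may be None.
        a = {name: (value or "") for name, value in attrs}
        if tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.update(d.strip().lower() for d in a.get("content", "").split(","))
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href") or None


def index_signals(html, headers):
    """Summarize whether a page asks search engines not to index it."""
    parser = RobotsSignals()
    parser.feed(html)
    directives = set(parser.directives)
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            # Drop an optional user-agent prefix such as "googlebot: noindex".
            directives.update(d.split(":")[-1].strip().lower() for d in value.split(","))
    return {
        "noindex": bool(directives & {"noindex", "none"}),
        "canonical": parser.canonical,
    }


print(index_signals('<head><meta name="robots" content="noindex, follow"></head>', {}))
print(index_signals("<p>results</p>", {"X-Robots-Tag": "googlebot: noindex"}))
```

To audit a live site, you could fetch one of its search URLs (for example with urllib.request) and pass the decoded body and the response headers to index_signals. A search page that reports no noindex and no canonical is the kind of page described above.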

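Bad actors take advantage of this by submitting large volumes of searches to a website’s internal search engine. These searches contain malicious advertisements or instructions, such as “buy cocaine.” Once search engines discover and index the resulting URLs, that text shows up in search results under the site’s trusted domain.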

What Vulnerabilities Are Exploited in ISAP Poisoning?

As crazy as these words may sound to someone who doesn’t work in the SEO (Search Engine Optimization) industry, these are actually some of the most basic 101-level best practices:

- Canonicalization: If your site can’t help but generate duplicate or unintended URLs, the best practice is to add a canonical tag to the page code that tells search engines which version of the page to index, typically the base page without the extra parameters.

- For example, site.com/search?search_term=A and site.com/search?search_term=B should both have a canonical tag pointing back to site.com/search.

- Robots.txt File: A properly configured robots.txt file can also help avoid this issue.

- Continuing with the example above, site.com/robots.txt should have a “disallow” directive for the /search path so that search engines don’t crawl search results pages.

- “Noindex” Tags: Lastly, a webmaster can outright block a URL (and its parameterized versions) from being indexed by search engines with a “noindex” robots meta tag or the equivalent X-Robots-Tag HTTP header.
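The canonical and robots.txt examples above each fit in a few lines of standard-library Python. This is a sketch assuming the same hypothetical site.com domain: canonical_link_tag (an illustrative helper) strips the query string so every search URL points back to site.com/search, and urllib.robotparser confirms that a Disallow: /search rule covers search URLs no matter what search_term they carry.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def canonical_link_tag(url: str) -> str:
    """Return a canonical tag pointing at the page with its query string and fragment removed."""
    parts = urlsplit(url)
    bare = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return f'<link rel="canonical" href="{escape(bare)}">'


# The robots.txt rule from the example: keep compliant crawlers out of /search.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

print(canonical_link_tag("https://site.com/search?search_term=A"))
# <link rel="canonical" href="https://site.com/search">
print(robots.can_fetch("Googlebot", "https://site.com/search?search_term=B"))  # False
print(robots.can_fetch("Googlebot", "https://site.com/about"))                # True
```

Two caveats apply. Search engines treat a canonical tag as a strong hint rather than a command, and robots.txt controls crawling, not indexing: Google can still index a disallowed URL it finds linked elsewhere (without its content), and a crawler blocked by robots.txt never sees that page’s canonical or noindex tags. Strip query strings this way only on pages, like search results, where the parameters don’t define distinct content.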

It’s kind of crazy to me to even call the absence of these practices a vulnerability, but without them, malicious actors can create URLs containing nefarious search terms that appear to be legitimate content hosted on trusted government or school domains.

How New is ISAP Poisoning?

The exploitation of search functions for illicit promotion has been ongoing for several years, with different studies highlighting the persistence and scale of this issue:

- In 2019, a report titled “Into the Dark: Unveiling Internal Site Search Abused for Black Hat SEO” seems to have coined the term. The report revealed that public websites, including educational and government domains, were being exploited using ISAP.

- In 2023, an article on Synthical titled “Reflected Search Poisoning for Illicit Promotion” described how RSP allowed attackers to exploit the reputation of high-ranking websites.

- The most recent study, titled “Reflected Search Poisoning for Illicit Promotion” (2024), found that over 11 million distinct Illicit Promotion Texts (IPTs) were generated, affecting tens of thousands of websites and major search engines like Google and Bing.

How To Prevent Internal Site Search Abuse

To prevent the misuse of official websites’ internal site search functions, a combination of technical and security measures should be implemented:

Technical Measures

- Canonical Tags: Use canonical tags to tell search engines that only the main pages should be indexed, preventing unnecessary duplication. Search engines treat this as a strong hint rather than a guarantee.

- Robots.txt File: Properly configure the robots.txt file to stop search engines from crawling pages, such as internal search results, that are not meant to appear in search results.

- Noindex Meta Tags: Add “noindex” meta tags to search result pages to prevent them from appearing in search engine results.

- Regular Monitoring and Updates: Regularly review the website’s Google Search Console reports and monitor indexed content to spot unusual activity early and take corrective action.
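For the noindex item, the directive doesn’t have to live in a meta tag; it can also be sent as an X-Robots-Tag HTTP header, which is convenient when search pages are rendered by many different templates. Below is a minimal sketch of WSGI middleware, assuming a Python web app and a hypothetical /search path prefix, that adds the header to every response under that prefix. noindex_under and demo_search_app are illustrative names, not part of any framework.

```python
from wsgiref.util import setup_testing_defaults


def noindex_under(app, prefix="/search"):
    """WSGI middleware: send 'X-Robots-Tag: noindex' on every response under `prefix`.

    PATH_INFO excludes the query string, so /search?search_term=... is covered too.
    """
    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "")
        if path != prefix and not path.startswith(prefix + "/"):
            return app(environ, start_response)

        def start_with_noindex(status, headers, exc_info=None):
            # Replace any existing X-Robots-Tag so the directive is unambiguous.
            headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
            headers.append(("X-Robots-Tag", "noindex"))
            return start_response(status, headers, exc_info)

        return app(environ, start_with_noindex)
    return wrapped


def demo_search_app(environ, start_response):
    """Stand-in for a site's real search handler (hypothetical)."""
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b"<h1>Search results</h1>"]


app = noindex_under(demo_search_app)


def response_headers(path: str) -> dict:
    """Call the wrapped app once and capture the headers it sends."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured.update(headers)

    list(app(environ, start_response))  # consume the response body
    return captured


print(response_headers("/search").get("X-Robots-Tag"))  # noindex
print(response_headers("/about").get("X-Robots-Tag"))   # None
```

For the header to have any effect, crawlers must be allowed to fetch the /search pages; if robots.txt disallows them, the noindex directive is never seen.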

Security Measures

- Input Validation and Sanitization: Validate and sanitize all input included in search query parameters to reduce the risk of malicious content being embedded in URLs.

- Secure URL Generation: Avoid reflecting raw user input directly into URLs. Implement secure URL generation methods to prevent harmful content from being inserted into links.

- Server-Side Logging and Anomaly Detection: Implement server-side logging to track searches and use anomaly detection to identify unusual activity. Early detection of misuse can help mitigate potential exploits before they escalate.
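The security measures above can be sketched as a small gate in front of a site’s search handler. This Python sketch is hypothetical throughout: the length limit, the spam patterns, the 60-second window, and the 30-search threshold are placeholders to tune, and client_id could be an IP address or a session ID depending on the site. It logs and rejects queries that carry links or contact details, and it flags clients whose search volume spikes.

```python
import logging
import re
import time
from collections import defaultdict, deque

# All limits and patterns below are hypothetical starting points, not recommendations.
MAX_QUERY_LEN = 100
SPAMMY = re.compile(
    r"https?://|www\.|\.(?:com|net|org|shop)\b|@|telegram|whatsapp|\d{7,}",
    re.IGNORECASE,
)

log = logging.getLogger("site_search")


def validate_query(query: str) -> bool:
    """Accept short, plain-text queries; reject ones carrying links or contact details."""
    q = query.strip()
    return 0 < len(q) <= MAX_QUERY_LEN and SPAMMY.search(q) is None


class SearchVolumeMonitor:
    """Sliding-window count of searches per client (IP address, session, etc.)."""

    def __init__(self, window_seconds=60.0, limit=30, clock=time.monotonic):
        self.window = window_seconds
        self.limit = limit
        self.clock = clock
        self.hits = defaultdict(deque)

    def record(self, client_id: str) -> bool:
        """Record one search and return True if the client is now over the limit."""
        now = self.clock()
        hits = self.hits[client_id]
        hits.append(now)
        while now - hits[0] > self.window:  # drop searches older than the window
            hits.popleft()
        return len(hits) > self.limit


monitor = SearchVolumeMonitor()


def should_serve(client_id: str, query: str) -> bool:
    """Count every attempt, log anomalies, then decide whether to run the search."""
    over_limit = monitor.record(client_id)
    if over_limit:
        log.warning("search volume anomaly from %s", client_id)
    if not validate_query(query):
        log.info("rejected search from %s: %r", client_id, query)
        return False
    return not over_limit
```

Pattern matching alone would not catch a plain phrase such as “buy cocaine,” which is why the volume check and regular log review matter as much as input validation.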

Why Google Should Be Smarter About This

Despite Google’s nauseatingly repetitious claims that it consistently makes algorithm updates to battle spam in search results, the company still hasn’t figured out how (or cared enough) to address this dangerous type of exploitation in its search engine.

With all of the company’s investment in developing artificial intelligence (including deals to buy power from nuclear reactors to run it), you would think Google would be smart enough by now to catch this extremely simple form of search spam.

The fact that illicit content from government and university domains is being indexed shows that Google isn’t deliberately targeting this specific type of SEO abuse.

Sadly, I’m not surprised. Google’s search result quality has been falling apart for many years now because the company simply doesn’t care about organic search results anymore.

Why The Government Should Be Smarter About This

Drugs aren’t the only thing that could be promoted with this incredibly simple technique: porn, malware, scams, and more are all commonly promoted through SEO poisoning.

This easy-enough-for-a-five-year-old tactic could also be used to spread fake news and propaganda, all seemingly “endorsed” by a university or government agency.

I informed FEMA today that this vulnerability is currently compromising their DisasterAssistance.gov site. FEMA’s disaster recovery efforts are currently the focus of intense misinformation campaigns, so hopefully they get this fixed soon.

Need Help with Your Search Engine Results?

I have 20 years of experience driving traffic to websites and have owned an SEO agency for 13 years. If you’re having trouble with your SEO results, contact me to set up a consultation.

- Google is Helping U.S. Government Sites Advertise the Sale of Illegal Drugs - October 16, 2024
